Created using Wan 2.2 combined with a custom Embroidery LoRA

Wan Embroidery LoRA

This project grew out of an interest in treating embroidery as a moving material rather than a static texture, inspired by commercials and Houdini simulations.

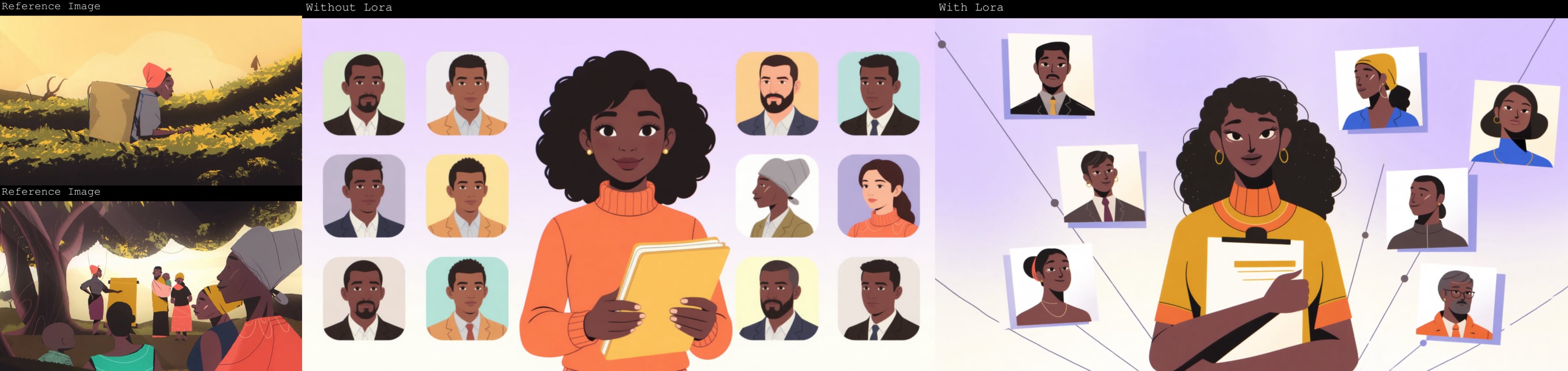

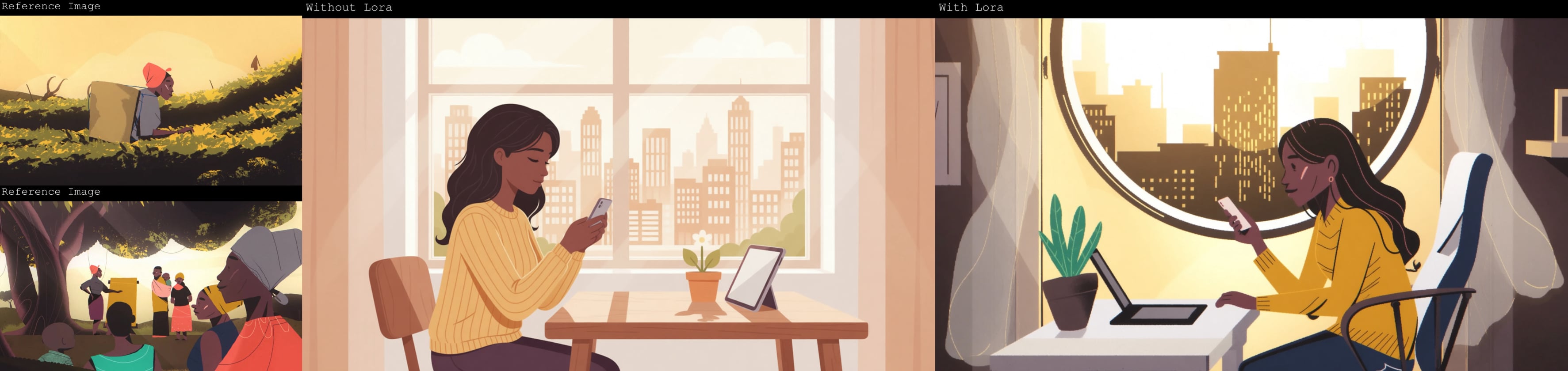

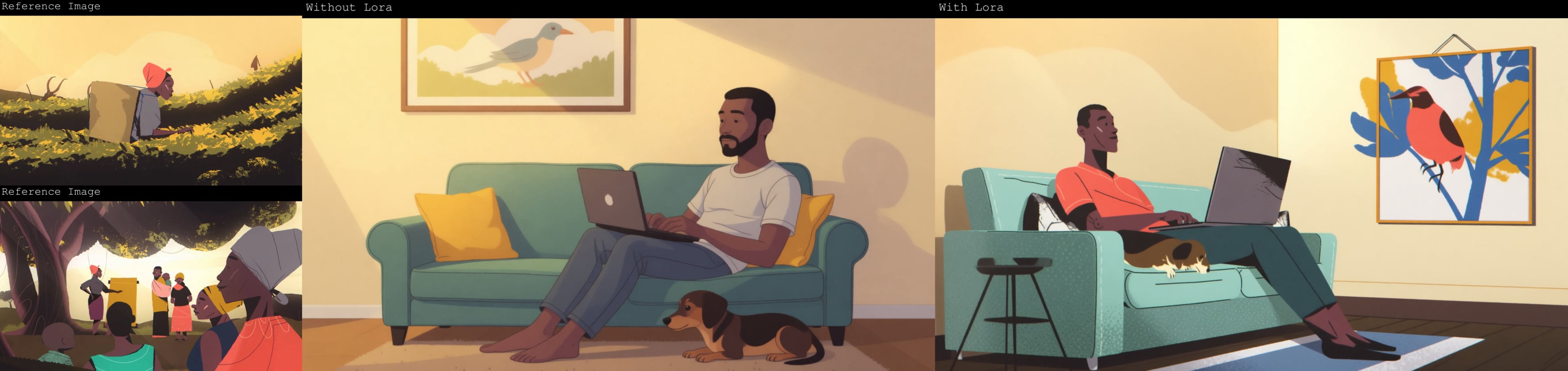

I trained a custom Embroidery LoRA to capture stitched patterns and the motion of thread. The goal was to preserve the tactile feel of embroidery while still allowing variation in form, movement, and composition.

The LoRA was integrated into an image-to-video (I2V) workflow. An LLM generated the prompts, Z-Image produced the source images, and Wan with the Embroidery LoRA animated those images into the final videos.

Tools used: AI Toolkit, ComfyUI

Models: Wan 2.2 (Video), Z-Image Turbo (Images), Gemini 2.5 (Prompts)